Lane Detection and Following System

- Real-time lane marking detection

- Canny edge detection and Hough transform

- Curve fitting and road curvature estimation

- Dynamic lane centering logic
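The Hough transform step in the list above can be sketched as follows. This is a dependency-free illustration, not the project's implementation: it assumes edge pixels have already been extracted as `(x, y)` coordinates (for example by a Canny edge detector; OpenCV provides `cv2.Canny` and `cv2.HoughLinesP` for a production pipeline). Each edge pixel votes for every line through it, using the normal form `rho = x*cos(theta) + y*sin(theta)`, and the bin with the most votes is the strongest line. The function names and the "split the image down the middle" lane heuristic are hypothetical.

```python
import math
from collections import Counter


def hough_accumulate(edge_points, theta_bins=180, rho_step=1.0):
    """Vote in (rho, theta) space using rho = x*cos(theta) + y*sin(theta)."""
    # Precompute cos/sin once per angle bin; theta covers [0, pi).
    angles = [
        (t, math.cos(math.pi * t / theta_bins), math.sin(math.pi * t / theta_bins))
        for t in range(theta_bins)
    ]
    votes = Counter()
    for x, y in edge_points:
        for t, cos_t, sin_t in angles:
            rho = x * cos_t + y * sin_t
            votes[(round(rho / rho_step), t)] += 1  # quantise rho into bins
    return votes


def strongest_line(edge_points, theta_bins=180, rho_step=1.0):
    """Return (rho, theta, votes) for the most-voted line, or None if no points."""
    votes = hough_accumulate(edge_points, theta_bins, rho_step)
    if not votes:
        return None
    (rho_bin, t), n = votes.most_common(1)[0]
    return rho_bin * rho_step, math.pi * t / theta_bins, n


def detect_lane_lines(edge_points, image_width):
    """Hypothetical heuristic: left-half edges form the left lane, right-half the right."""
    left = [(x, y) for x, y in edge_points if x < image_width / 2]
    right = [(x, y) for x, y in edge_points if x >= image_width / 2]
    return strongest_line(left), strongest_line(right)
```

A real detector would also threshold the accumulator and suppress neighbouring peaks, since a single line also produces partial votes in adjacent `(rho, theta)` bins.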
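The curve fitting, curvature estimation, and lane centering items can be sketched together as below. This is a minimal sketch under stated assumptions: lane pixels are already grouped into left and right sets of `(x, y)` image coordinates, and each lane is modelled as `x = a*y**2 + b*y + c` (a common choice because lane lines are close to vertical in the image). The fit is an ordinary least-squares solve written in pure Python; with NumPy, `numpy.polyfit(ys, xs, 2)` would do the same job. Curvature uses the standard radius formula `R = (1 + (2*a*y + b)**2)**1.5 / abs(2*a)`. All function names, the pixel units, the sign convention, and the proportional `gain` are hypothetical.

```python
import math


def solve3(m, v):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    aug = [row[:] + [v[i]] for i, row in enumerate(m)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, 3):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, 4):
                aug[r][c] -= factor * aug[col][c]
    sol = [0.0] * 3
    for r in (2, 1, 0):  # back substitution
        tail = sum(aug[r][c] * sol[c] for c in range(r + 1, 3))
        sol[r] = (aug[r][3] - tail) / aug[r][r]
    return sol


def fit_lane(points):
    """Least-squares fit of x = a*y**2 + b*y + c; returns (a, b, c)."""
    s = [0.0] * 5  # s[k] = sum of y**k
    t = [0.0] * 3  # t[k] = sum of x * y**k
    for x, y in points:
        for k in range(5):
            s[k] += y ** k
        for k in range(3):
            t[k] += x * y ** k
    # Normal equations for the design matrix with rows [y**2, y, 1].
    normal = [[s[4], s[3], s[2]],
              [s[3], s[2], s[1]],
              [s[2], s[1], s[0]]]
    return tuple(solve3(normal, [t[2], t[1], t[0]]))


def lane_x(fit, y):
    a, b, c = fit
    return a * y * y + b * y + c


def radius_of_curvature(fit, y):
    """Radius of curvature at row y, in the same (pixel) units as the fit."""
    a, b, _ = fit
    if a == 0:
        return math.inf  # a straight line has no curvature
    return (1 + (2 * a * y + b) ** 2) ** 1.5 / abs(2 * a)


def center_offset(left_fit, right_fit, y, image_width):
    """Assumes the camera sits at the image centre; positive means right of lane centre."""
    lane_center = (lane_x(left_fit, y) + lane_x(right_fit, y)) / 2
    return image_width / 2 - lane_center


def steering_command(offset, gain=0.01):
    """Hypothetical proportional controller; negative output steers left."""
    return -gain * offset
```

Evaluating `center_offset` at the bottom row of the image (the largest `y`) gives the offset closest to the vehicle. A deployed system would convert pixels to metres through camera calibration and replace the bare proportional term with a tuned controller.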

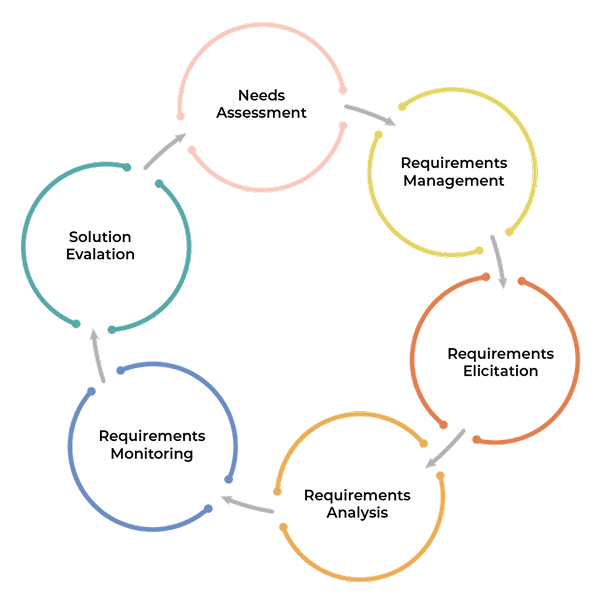

This project aims to design and develop a self-driving car system using artificial intelligence, computer vision, and deep learning. The system enables a vehicle to navigate autonomously in a simulated or real-world environment by perceiving its surroundings, following road rules, and making driving decisions much as a human driver would. Students will implement key technologies such as object detection, lane tracking, traffic sign recognition, path planning, and real-time control systems.

100,000+ learners uplifted through our hybrid classroom and online training, enriched by real-time projects and job support.

Come and chat with us about your goals over a cup of coffee.

2nd Floor, Hitech City Rd, Above Domino's, opp. Cyber Towers, Jai Hind Enclave, Hyderabad, Telangana.

3rd Floor, Site No 1&2 Saroj Square, Whitefield Main Road, Munnekollal Village Post, Marathahalli, Bengaluru, Karnataka.